10 Tips for Better Redux Architecture

When I started using React, there was no Redux. There was only the Flux architecture, and about a dozen competing implementations of it.

Now there are two clear winners for data management in React: Redux and MobX, and the latter isn’t even a Flux implementation. Redux has caught on so much that it’s not just being used for React anymore. You can find Redux architecture implementations for other frameworks, including Angular 2. See ngrx/store, for example.

Side note: MobX is cool, and I’d probably choose it over Redux for simple UIs, because it’s less complicated and less verbose. That said, there are some important features of Redux that MobX doesn’t give you, and it’s important to understand what those features are before you decide what’s right for your project.

Side side note: Relay and Falcor are other interesting solutions for state management, but unlike Redux and MobX, they must be backed by GraphQL and Falcor Server, respectively, and all Relay state corresponds to some server-persisted data. AFAIK, neither offers a good story for client-side-only, transient state management. You may be able to enjoy the benefits of both by mixing and matching Relay or Falcor with Redux or MobX, differentiating between client-only state and server-persisted state. Bottom line: There is no clear single winner for state management on the client today. Use the right tool for the job at hand.

Dan Abramov, the creator of Redux, made a couple of great courses on the topic:

- Getting Started with Redux

- Building React Applications with Idiomatic Redux

Both are great step-by-step tutorials that explain Redux basics, but you’ll also need a higher-level understanding to get the most from Redux.

The following are tips that will help you build better Redux apps.

1. Understand the Benefits of Redux

There are a couple of important goals for Redux that you need to keep in mind:

- Deterministic View Renders

- Deterministic State Reproduction

Determinism is important for testability and for diagnosing and fixing bugs. If your application views and state are nondeterministic, it’s impossible to know whether or not the views and state will always be valid. You might even say that nondeterminism is a bug in itself.

But some things are inherently nondeterministic, such as the timing of user input and network I/O. So how can we ever know if our code really works? Easy: isolation.

The main purpose of Redux is to isolate state management from I/O side effects such as rendering the view or working with the network. When side effects are isolated, code becomes much simpler. It’s a lot easier to understand and test your business logic when it’s not all tangled up with network requests and DOM updates.

When your view render is isolated from network I/O and state updates, you can achieve a deterministic view render, meaning: given the same state, the view will always render the same output. It eliminates the possibility of problems such as race conditions from asynchronous stuff randomly wiping out bits of your view, or mutilating bits of your state as your view is in the process of rendering.

When a newbie thinks about creating a view, they might think, “This bit needs the user model, so I’ll launch an async request to fetch that and when that promise resolves, I’ll update the user component with their name. That bit over there requires the to-do items, so we’ll fetch that, and when the promise resolves, we’ll loop over them and draw them to the screen.”

There are a few major problems with this approach:

- You never have all the data you need to render the complete view at any given moment. You don’t actually start to fetch data until the component starts to do its thing.

- Different fetch tasks can come in at different times, subtly changing the order that things happen in the view render sequence. To truly understand the render sequence, you have to have knowledge of something you can’t predict: the duration of each async request. Pop quiz: In the above scenario, what renders first, the user component or the to-do items? Answer: It’s a race!

- Sometimes event listeners will mutate the view state, which might trigger another render, further complicating the sequence.

The key problem with storing your data in the view state and giving async event listeners access to mutate that view state is this:

Mingling data fetching, data manipulation and view render concerns is a recipe for time-traveling spaghetti.

I know that sounds kinda cool in a B-movie sci-fi kinda way, but believe me, time-traveling spaghetti is the worst tasting kind there is!

What the Flux architecture does is enforce a strict separation and sequence, which obeys these rules every time:

- First, we get into a known, fixed state…

- Then we render the view. Nothing can change the state again for this render loop.

- Given the same state, the view will always render the same way.

- Event listeners listen for user input, and network request handlers listen for responses. When input or a response arrives, an action is dispatched to the store.

- When an action is dispatched, the state is updated to a new known state and the sequence repeats. Only dispatched actions can touch the state.

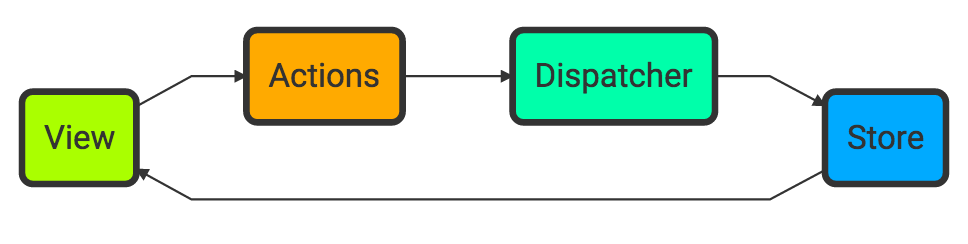

That’s Flux in a nutshell: A one-way data flow architecture for your UI:

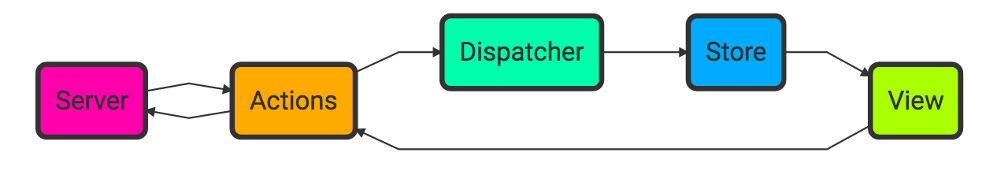

With the Flux architecture, the view listens for user input, translates that input into action objects, and dispatches them to the store. The store updates the application state and notifies the view to render again. Of course, the view is rarely the only source of input and events, but that’s no problem. Additional event listeners dispatch action objects, just like the view does.
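To make that loop concrete, here is a minimal sketch of a store that follows the rules above: state lives in one place, only dispatched actions change it, and every change notifies the view to render again. The `createStore`, `counter`, and `render` functions here are hypothetical stand-ins written for illustration, not Redux’s actual implementation (Redux’s real `createStore` also validates actions and refuses dispatches from inside a reducer).

```javascript
// A hypothetical, minimal one-way data flow store (not Redux's implementation).
const createStore = (reducer, initialState) => {
  let state = initialState;
  const listeners = [];

  return {
    getState: () => state,
    // Only dispatched actions can change the state.
    dispatch: action => {
      state = reducer(state, action);
      // Notify subscribers (such as the view) that a new, fixed state is ready.
      listeners.forEach(listener => listener());
      return action;
    },
    subscribe: listener => {
      listeners.push(listener);
      // Return an unsubscribe function.
      return () => {
        const index = listeners.indexOf(listener);
        if (index >= 0) listeners.splice(index, 1);
      };
    }
  };
};

// Hypothetical reducer and "view" for illustration.
const counter = (state = 0, action = {}) =>
  action.type === 'COUNTER::INCREMENT' ? state + 1 : state;

const store = createStore(counter, 0);
const renders = [];

// Given the same state, the view always renders the same output.
const render = () => renders.push(`Count: ${store.getState()}`);

store.subscribe(render);
render(); // initial render from a known state

// User input (or a network response) becomes a dispatched action.
store.dispatch({ type: 'COUNTER::INCREMENT' });
store.dispatch({ type: 'COUNTER::INCREMENT' });

// renders is now ['Count: 0', 'Count: 1', 'Count: 2']
```

The view never touches the state directly; it only reads it after the store has settled into a new known state.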

Importantly, state updates in Flux are transactional. Instead of simply calling an update method on the state, or directly manipulating a value, action objects get dispatched to the store. An action object is a transaction record. You can think of it like a bank transaction — a record of the change to be made. When you make a deposit to your bank, your balance from 5 minutes ago doesn’t get wiped out. Instead, a new balance is appended to the transaction history. Action objects add a transaction history to your application state.

Action objects look like this:

```javascript
// action-object-example.js
const ADD_TODO = 'ADD_TODO';

const action = {
  type: ADD_TODO,
  payload: 'Learn Redux'
};
```

What action objects give you is the ability to keep a running log of all state transactions. That log can be used to reproduce the state in a deterministic way, meaning:

Given the same initial state and the same transactions in the same order, you always get the same state as a result.

This has important implications:

- Easy testability

- Easy undo/redo

- Time travel debugging

- Durability — Even if the state gets wiped out, if you have a record of every transaction, you can reproduce it.
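Here is a small sketch of what a transaction log buys you, following the bank analogy above. The `balance` reducer, the `BANK::` action types, and the `replay` helper are all hypothetical, written only to show deterministic reproduction, undo, and time travel falling out of a plain list of actions.

```javascript
// Hypothetical bank-balance reducer, following the bank-transaction analogy.
const DEPOSIT = 'BANK::DEPOSIT';
const WITHDRAW = 'BANK::WITHDRAW';

const balance = (state = 0, action = {}) => {
  switch (action.type) {
    case DEPOSIT: return state + action.payload;
    case WITHDRAW: return state - action.payload;
    default: return state;
  }
};

// The transaction log: every dispatched action, in order.
const log = [
  { type: DEPOSIT, payload: 100 },
  { type: WITHDRAW, payload: 30 },
  { type: DEPOSIT, payload: 5 }
];

// Same initial state + same actions in the same order = same resulting state.
const replay = (actions, initialState = 0) =>
  actions.reduce((state, action) => balance(state, action), initialState);

const current = replay(log);              // 75
const undone = replay(log.slice(0, -1));  // 70: undo by dropping the last transaction

// Time travel: the state after each step of the log.
const timeline = log.map((_, i) => replay(log.slice(0, i + 1))); // [100, 70, 75]
```

Replaying from scratch is the simplest possible approach; a real app would more likely keep snapshots, but the log is what makes either approach possible.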

Who doesn’t want mastery over space and time? Transactional state gives you time-traveling superpowers.

2. Some Apps Don’t Need Redux

If your UI workflow is simple, all of this may be overkill. If you’re making a tic-tac-toe game, do you really need undo/redo? The games rarely last more than a minute. If the user screws up, you could just reset the game and let them start over.

If:

- User workflows are simple

- Users don’t collaborate

- You don’t need to manage server-sent events (SSE) or websockets

- You fetch data from a single data source per view

If so, the sequence of events in your app is probably simple enough that the benefits of transactional state are not worth the extra effort.

Maybe you don’t need to Fluxify your app. There is a much simpler solution for apps like that. Check out MobX.

However, as the complexity of your app grows, as the complexity of view state management grows, the value of transactional state grows with it, and MobX doesn’t provide transactional state management out of the box.

If:

- User workflows are complex

- Your app has a large variety of user workflows (consider both regular users and administrators)

- Users can collaborate

- You’re using web sockets or SSE

- You’re loading data from multiple endpoints to build a single view

You could benefit enough from a transactional state model to make it worth the effort. Redux might be a good fit for you.

What do web sockets and SSE have to do with this? As you add more sources of asynchronous I/O, it gets harder to understand what’s going on in the app with nondeterministic state management. Deterministic state and a record of state transactions radically simplify apps like this.

In my opinion, most large SaaS products involve at least a few complex UI workflows and should be using transactional state management. Most small utility apps & simple prototypes shouldn’t. Use the right tool for the job.

3. Understand Reducers

Redux = Flux + Functional Programming

Flux prescribes one-way data flow and transactional state with action objects, but doesn’t say anything about how to handle action objects. That’s where Redux comes in.

The primary building block of Redux state management is the reducer function. What’s a reducer function?

In functional programming, the common utility reduce() or fold() is used to apply a reducer function to each value in a list of values in order to accumulate a single output value. Here’s an example of a summing reducer applied to a JavaScript array with Array.prototype.reduce():

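```javascript
// simple-reducer.js
const initialState = 0;

const reducer = (state = initialState, data) => state + data;

// Pass the initial value explicitly so reduce() starts from initialState.
const total = [0, 1, 2, 3].reduce(reducer, initialState);

console.log(total); // 6
```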

Instead of operating on arrays, Redux applies reducers to a stream of action objects. Remember, an action object looks like this:

```javascript
// action-object-example.js
const ADD_TODO = 'ADD_TODO';

const action = {
  type: ADD_TODO,
  payload: 'Learn Redux'
};
```

Let’s turn the summing reducer above into a Redux-style reducer:

```javascript
// summing-reducer.js
const defaultState = 0;

const reducer = (state = defaultState, action) => {
  switch (action.type) {
    case 'ADD': return state + action.payload;
    default: return state;
  }
};
```

Now we can apply it to some test actions:

```javascript
// summing-reducer-test-actions.js
const actions = [
  { type: 'ADD', payload: 0 },
  { type: 'ADD', payload: 1 },
  { type: 'ADD', payload: 2 }
];

const total = actions.reduce(reducer, 0); // 3
```

4. Reducers Must be Pure Functions

In order to achieve deterministic state reproduction, reducers must be pure functions. No exceptions. A pure function:

- Given the same input, always returns the same output.

- Has no side-effects.

Importantly, in JavaScript, all non-primitive objects are passed into functions as references. In other words, if you pass in an object, and then directly mutate a property on that object, the object changes outside the function as well. That’s a side effect. You can’t know the full meaning of calling the function without also knowing the full history of the object you passed in. That’s bad.

Reducers should return a new object, instead. You can do that with Object.assign({}, state, { thingToChange }), for instance.

Array parameters are also references. You can’t just .push() new items to an array in a reducer, because .push() is a mutating operation. Likewise, so are .pop(), .shift(), .unshift(), .reverse(), .splice(), and any other mutator method.

If you want to be safe with arrays, you need to restrict the operations you perform on the state to the safe accessor methods. Instead of .push(), use .concat().

Take a look at the ADD_CHAT case in this chat reducer:

```javascript
// chat-reducer.js
const ADD_CHAT = 'CHAT::ADD_CHAT';

const defaultState = {
  chatLog: [],
  currentChat: {
    id: 0,
    msg: '',
    user: 'Anonymous',
    timeStamp: 1472322852680
  }
};

const chatReducer = (state = defaultState, action = {}) => {
  const { type, payload } = action;

  switch (type) {
    case ADD_CHAT:
      return Object.assign({}, state, {
        chatLog: state.chatLog.concat(payload)
      });

    default: return state;
  }
};
```

As you can see, a new object is created with Object.assign(), and we append to the array with .concat() instead of .push().
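One way to see the difference, and to catch accidental mutation in tests, is to freeze the incoming state. This is a sketch: `addMessageImpure` and `addMessagePure` are hypothetical helpers, and `Object.freeze()` is shallow, so nested objects have to be frozen individually (as the inner array is here).

```javascript
// Freeze the state so accidental mutation fails loudly. Array.prototype.push
// throws a TypeError on a frozen array, even outside strict mode.
const state = Object.freeze({ chatLog: Object.freeze(['Hi']) });

// Impure: mutates the array it was given.
const addMessageImpure = (state, msg) => {
  state.chatLog.push(msg);
  return state;
};

// Pure: builds new objects and leaves the input untouched.
const addMessagePure = (state, msg) =>
  Object.assign({}, state, { chatLog: state.chatLog.concat(msg) });

const next = addMessagePure(state, 'there');
// next.chatLog is ['Hi', 'there']; state.chatLog is still ['Hi']

let mutationBlocked = false;
try {
  addMessageImpure(state, 'oops');
} catch (error) {
  mutationBlocked = error instanceof TypeError;
}
```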

Personally, I don’t like to worry about accidentally mutating my state, so lately I’ve been experimenting with using immutable data APIs with Redux. If my state is an immutable object, I don’t even need to look at the code to know that the object isn’t being accidentally mutated. I came to this conclusion after working on a team and discovering bugs from accidental state mutations.

There’s a lot more to pure functions than this. If you’re going to use Redux for production apps, you really need a good grasp of what pure functions are, and other things you need to be mindful of (such as dealing with time, logging, & random numbers). For more on that, see “Master the JavaScript Interview: What is a Pure Function?”.

5. Remember: Reducers Must be the Single Source of Truth

All state in your app should have a single source of truth, meaning that the state is stored in a single place, and anywhere else that state is needed should access the state by reference to its single source of truth.

It’s OK to have different sources of truth for different things. For example, the URL could be the single source of truth for the user request path and URL parameters. Maybe your app has a configuration service which is the single source of truth for your API URLs. That’s fine. However…

When you store any state in a Redux store, any access to that state should be made through Redux. Failing to adhere to this principle can result in stale data or the kinds of shared state mutation bugs that Flux and Redux were invented to solve.

In other words, without the single source of truth principle, you potentially lose:

- Deterministic view render

- Deterministic state reproduction

- Easy undo/redo

- Time travel debugging

- Easy testability

Either Redux or don’t Redux your state. If you do it halfway, you could undo all of the benefits of Redux.
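Here is a small sketch of how a second copy of store state goes stale. `storeState` stands in for the store, `getUserName` is a hypothetical selector, and `headerUserName` is a hypothetical component-level copy of the same value: exactly the kind of second source of truth to avoid.

```javascript
const CHANGE_USERNAME = 'CHAT::CHANGE_USERNAME';

const reducer = (state = { userName: 'Anonymous' }, action = {}) => {
  switch (action.type) {
    case CHANGE_USERNAME: return Object.assign({}, state, { userName: action.payload });
    default: return state;
  }
};

// Hypothetical selector: the one sanctioned way to read the user name.
const getUserName = state => state.userName;

let storeState = reducer();

// Anti-pattern: a component stashes its own copy of store state.
const headerUserName = storeState.userName;

storeState = reducer(storeState, { type: CHANGE_USERNAME, payload: 'Bender' });

// Reading through the single source of truth sees the update...
const fresh = getUserName(storeState); // 'Bender'
// ...while the private copy is now stale.
const stale = headerUserName; // 'Anonymous'
```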

6. Use Constants for Action Types

I like to make sure that actions are easy to trace to the reducer that employs them when you look at the action history. If all your actions have short, generic names like CHANGE_MESSAGE, it becomes harder to understand what’s going on in your app. However, if action types have more descriptive names like CHAT::CHANGE_MESSAGE, it’s a lot clearer what’s going on.

Also, if you make a typo and dispatch an undefined action constant, the app will throw an error to alert you of the mistake. If you make a typo with an action type string, the action will fail silently.

Keeping all the action types for a reducer gathered in one place at the top of the file can also help you:

- Keep names consistent

- Quickly understand the reducer API

- See what’s changed in pull requests
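A minimal sketch of namespaced constants gathered at the top of a hypothetical chat reducer file, plus the two kinds of typo described above. In this self-contained sketch, a misspelled constant is simply an undeclared identifier, so JavaScript throws a ReferenceError; a misspelled string just matches no case.

```javascript
// Action types for a hypothetical chat reducer, namespaced and kept at the top of the file.
const ADD_CHAT = 'CHAT::ADD_CHAT';
const CHANGE_STATUS = 'CHAT::CHANGE_STATUS';
const CHANGE_USERNAME = 'CHAT::CHANGE_USERNAME';

const chatReducer = (state = { userName: 'Anonymous' }, action = {}) => {
  switch (action.type) {
    case CHANGE_USERNAME:
      return Object.assign({}, state, { userName: action.payload });
    default: return state;
  }
};

// A typo in a string literal matches no case, so the action is silently ignored.
const ignored = chatReducer(undefined, { type: 'CHAT::CHANGE_USRNAME', payload: 'Bender' });
// ignored.userName is still 'Anonymous'

// A typo in a constant name fails immediately with a ReferenceError.
let typoCaught = false;
try {
  chatReducer(undefined, { type: CHANGE_USRNAME, payload: 'Bender' });
} catch (error) {
  typoCaught = error instanceof ReferenceError;
}
```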

7. Use Action Creators to Decouple Action Logic from Dispatch Callers

When I tell people that they can’t generate IDs or grab the current time in a reducer, I get funny looks. If you’re staring at your screen suspiciously right now rest assured: you’re not alone.

So where is a good place to handle impure logic like that without repeating it everywhere you need to use the action? In an action creator.

Action creators have other benefits, as well:

- Keep action type constants encapsulated in your reducer file so you don’t have to import them anywhere else.

- Make some calculations on inputs prior to dispatching the action.

- Reduce boilerplate

Let’s use an action creator to generate the ADD_CHAT action object:

```javascript
// impure-action-creator.js
import cuid from 'cuid';

// ADD_CHAT is the action type constant defined at the top of the reducer file.

// Action creators can be impure.
export const addChat = ({
  // cuid is safer than random uuids/v4 GUIDs
  // see usecuid.org
  id = cuid(),
  msg = '',
  user = 'Anonymous',
  timeStamp = Date.now()
} = {}) => ({
  type: ADD_CHAT,
  payload: { id, msg, user, timeStamp }
});
```

As you can see above, we’re using cuid to generate random ids for each chat message, and Date.now() to generate the time stamp. Both of those are impure operations which are not safe to run in the reducer — but it’s perfectly OK to run them in action creators.
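Here is a minimal sketch that makes the split visible: the impurity happens once, when the action is created, and the recorded actions then replay to the same state every time. Because cuid is an external package, this sketch swaps in a hypothetical counter-based `createId()`; the reducer is a trimmed version of the chat reducer above.

```javascript
// Hypothetical stand-in for cuid: impure by design, since each call returns a new id.
let lastId = 0;
const createId = () => `chat-${++lastId}`;

const ADD_CHAT = 'CHAT::ADD_CHAT';

// Impure action creator: generates ids and reads the clock.
const addChat = ({
  id = createId(),
  msg = '',
  user = 'Anonymous',
  timeStamp = Date.now()
} = {}) => ({
  type: ADD_CHAT,
  payload: { id, msg, user, timeStamp }
});

// Pure reducer: everything it needs arrives in the action.
const chatReducer = (state = { chatLog: [] }, action = {}) => {
  switch (action.type) {
    case ADD_CHAT:
      return Object.assign({}, state, {
        chatLog: state.chatLog.concat(action.payload)
      });
    default: return state;
  }
};

// Record the actions once...
const actions = [addChat({ msg: 'Hi' }), addChat({ msg: 'Yo', user: 'Bender' })];

// ...then replaying them always produces identical state, ids and time stamps included.
const first = actions.reduce(chatReducer, chatReducer());
const second = actions.reduce(chatReducer, chatReducer());
```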

Reduce Boilerplate with Action Creators

Some people think that using action creators adds boilerplate to the project. On the contrary, you’re about to see how I use them to greatly reduce the boilerplate in my reducers.

Tip: If you store your constants, reducer, and action creators all in the same file, you’ll avoid the boilerplate of importing them from separate locations.

Imagine we want to add the ability for a chat user to customize their user name and availability status. We could add a couple of action type handlers to the reducer like this:

```javascript
// reducer-boilerplate.js
const chatReducer = (state = defaultState, action = {}) => {
  const { type, payload } = action;

  switch (type) {
    case ADD_CHAT:
      return Object.assign({}, state, {
        chatLog: state.chatLog.concat(payload)
      });

    case CHANGE_STATUS:
      return Object.assign({}, state, {
        statusMessage: payload
      });

    case CHANGE_USERNAME:
      return Object.assign({}, state, {
        userName: payload
      });

    default: return state;
  }
};
```

For larger reducers, this could grow to a lot of boilerplate. Lots of the reducers I’ve built can get much more complex than that, with lots of redundant code. What if we could collapse all the simple property change actions together?

Turns out, that’s easy:

```javascript
// chat-reducer-sans-boilerplate.js
const chatReducer = (state = defaultState, action = {}) => {
  const { type, payload } = action;

  switch (type) {
    case ADD_CHAT:
      return Object.assign({}, state, {
        chatLog: state.chatLog.concat(payload)
      });

    // Catch all simple changes
    case CHANGE_STATUS:
    case CHANGE_USERNAME:
      return Object.assign({}, state, payload);

    default: return state;
  }
};
```

Even with the extra spacing and the extra comment, this version is shorter — and this is only two cases. The savings can really add up.

Isn’t switch…case dangerous? I see a fall through!

You may have read somewhere that switch statements should be avoided, specifically so that we can avoid accidental fall through, and because the list of cases can become bloated. You may have heard that you should never use fall through intentionally, because it’s hard to catch accidental fall-through bugs. That’s all good advice, but let’s think carefully about the dangers I mentioned above:

- Reducers are composable, so case bloat is not a problem. If your list of cases gets too large, break off pieces and move them into separate reducers.

- Every case body returns, so accidental fall through should never happen. None of the grouped fall through cases should have bodies other than the one performing the catch.

Redux uses switch…case well. I’m officially changing my advice on the matter. As long as you follow the simple rules above (keep switches small and focused, and return from every case with its own body), switch statements are fine.

You may have noticed that this version requires a different payload. This is where your action creators come in:

```javascript
// chat-action-creators.js
export const changeStatus = (statusMessage = 'Online') => ({
  type: CHANGE_STATUS,
  payload: { statusMessage }
});

export const changeUserName = (userName = 'Anonymous') => ({
  type: CHANGE_USERNAME,
  payload: { userName }
});
```
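Put together, the catch-all reducer and these action creators can be exercised end to end. This is a self-contained sketch: the constants and `defaultState` are assumed values (only the `userName` and `statusMessage` keys are modeled), and the actions are reduced with `Array.prototype.reduce()` rather than dispatched through a store.

```javascript
const CHANGE_STATUS = 'CHAT::CHANGE_STATUS';
const CHANGE_USERNAME = 'CHAT::CHANGE_USERNAME';

const defaultState = { userName: 'Anonymous', statusMessage: 'Online' };

const chatReducer = (state = defaultState, action = {}) => {
  const { type, payload } = action;
  switch (type) {
    // Catch all simple changes: the payload already matches the state shape.
    case CHANGE_STATUS:
    case CHANGE_USERNAME:
      return Object.assign({}, state, payload);
    default: return state;
  }
};

// Action creators translate arguments into the state shape.
const changeStatus = (statusMessage = 'Online') => ({
  type: CHANGE_STATUS,
  payload: { statusMessage }
});

const changeUserName = (userName = 'Anonymous') => ({
  type: CHANGE_USERNAME,
  payload: { userName }
});

const state = [changeUserName('Bender'), changeStatus('Away')]
  .reduce(chatReducer, chatReducer());
// state is { userName: 'Bender', statusMessage: 'Away' }
```

One trade-off worth noting: because the catch-all case merges whatever payload it receives, the action creators are what keeps callers from writing arbitrary keys into the state, which is one more reason to build these actions only through their creators.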

As you can see, these action creators are making the translation between the arguments, and the state shape. But that’s not all they’re doing…

8. Use ES6 Parameter Defaults for Signature Documentation

If you’re using Tern.js with an editor plugin (available for popular editors like Sublime Text and Atom), it’s going to read those ES6 default assignments and infer the required interface of your action creators, so when you’re calling them, you can get IntelliSense-style hints and autocomplete. This takes cognitive load off developers, because they won’t have to remember the required payload type or check the source code when they forget.

If you’re not using a type inference plugin such as Tern, TypeScript, or Flow, you should be.

Note: I prefer to rely on inference provided by default assignments visible in the function signature as opposed to type annotations, because:

- You don’t have to use Flow or TypeScript to make it work: instead you use standard JavaScript.

- If you are using TypeScript or Flow, annotations are redundant with default assignments, because both TypeScript and Flow infer the type from the default assignment.

- I find it a lot more readable when there’s less syntax noise.

- You get default settings, which means, even if you’re not stopping the CI build on type errors (you’d be surprised, lots of projects don’t), you’ll never have an accidental undefined parameter lurking in your code.
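A quick illustration of what the defaults buy you, using a self-contained copy of the `changeUserName()` creator from above. One caveat worth knowing: ES6 default parameters apply only when an argument is `undefined`, so an explicit `null` still gets through.

```javascript
const CHANGE_USERNAME = 'CHAT::CHANGE_USERNAME';

// The default value documents the expected type (a string) and fills in missing arguments.
const changeUserName = (userName = 'Anonymous') => ({
  type: CHANGE_USERNAME,
  payload: { userName }
});

const omitted = changeUserName().payload.userName;                    // 'Anonymous'
const explicitUndefined = changeUserName(undefined).payload.userName; // 'Anonymous'
const explicitNull = changeUserName(null).payload.userName;           // null: defaults only replace undefined
const provided = changeUserName('Bender').payload.userName;           // 'Bender'
```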

9. Use Selectors for Calculated State and Decoupling

Imagine you’re building the most complex chat app in the history of chat apps. You’ve written 500k lines of code, and THEN the product team throws a new feature requirement at you that’s going to force you to change the data structure of your state.

No need to panic. You were smart enough to decouple the rest of the app from the shape of your state with selectors. Bullet: dodged.

For almost every reducer I write, I create a selector that simply exports all the variables I need to construct the view. Let’s see what that might look like for our simple chat reducer:

```javascript
export const getViewState = state => state;
```

Yeah, I know. That’s so simple it’s not even worth a gist. You might be thinking I’m crazy now, but remember that bullet we dodged before? What if we wanted to add some calculated state, like a full list of all the users who’ve chatted during this session? Let’s call it recentlyActiveUsers.

This information is already stored in our current state — but not in a way that’s easy to grab. Let’s go ahead and grab it in getViewState():

```javascript
// get-view-state.js
export const getViewState = state => Object.assign({}, state, {
  // return a list of users active during this session
  recentlyActiveUsers: [...new Set(state.chatLog.map(chat => chat.user))]
});
```

If you put all your calculated state in selectors, you:

- Reduce the complexity of your reducers & components

- Decouple the rest of your app from your state shape

- Obey the single source of truth principle, even within your reducer
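To make the “bullet dodged” scenario concrete, here is a hedged sketch with two hypothetical state shapes: one where each chat stores the user’s name, and a redesign where users are normalized into a lookup table. Only the selector changes; the hypothetical `renderUserList` view function keeps working untouched.

```javascript
// Hypothetical original state shape: each chat stores the user's name.
const stateV1 = {
  chatLog: [
    { id: 1, user: 'Bender', msg: 'Hi' },
    { id: 2, user: 'Andrew', msg: 'Hey' },
    { id: 3, user: 'Bender', msg: 'Bye' }
  ]
};

// Hypothetical redesign: users normalized into a lookup table keyed by id.
const stateV2 = {
  usersById: { u1: { name: 'Bender' }, u2: { name: 'Andrew' } },
  chatLog: [
    { id: 1, userId: 'u1', msg: 'Hi' },
    { id: 2, userId: 'u2', msg: 'Hey' },
    { id: 3, userId: 'u1', msg: 'Bye' }
  ]
};

// Only the selectors know the state shape.
const getRecentlyActiveUsersV1 = state =>
  [...new Set(state.chatLog.map(chat => chat.user))];

const getRecentlyActiveUsersV2 = state =>
  [...new Set(state.chatLog.map(chat => state.usersById[chat.userId].name))];

// The view depends only on the selector's output, so it doesn't change.
const renderUserList = users => `Active: ${users.join(', ')}`;

const before = renderUserList(getRecentlyActiveUsersV1(stateV1)); // 'Active: Bender, Andrew'
const after = renderUserList(getRecentlyActiveUsersV2(stateV2));  // 'Active: Bender, Andrew'
```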

10. Use TDD: Write Tests First

Many studies have compared test-first to test-after methodologies, and to no tests at all. The results are clear and dramatic: Most of the studies show a 40–80% reduction in shipping bugs as a result of writing tests before you implement features.

TDD can effectively cut your shipping bug density in half, and there’s plenty of evidence to back up that claim.

While writing the examples in this article, I started all of them with unit tests.

To avoid fragile tests, I created the following factories that I used to produce expectations:

```javascript
// chat-reducer-factories.js
const createChat = ({
  id = 0,
  msg = '',
  user = 'Anonymous',
  timeStamp = 1472322852680
} = {}) => ({
  id, msg, user, timeStamp
});

const createState = ({
  userName = 'Anonymous',
  chatLog = [],
  statusMessage = 'Online',
  currentChat = createChat()
} = {}) => ({
  userName, chatLog, statusMessage, currentChat
});
```

Notice these both provide default values, which means I can override properties individually to create only the data I’m interested in for any particular test.

Here’s how I used them:

```javascript
// test-default-state.js
describe('chatReducer()', ({ test }) => {
  test('with no arguments', ({ same, end }) => {
    const msg = 'should return correct default state';

    const actual = reducer();
    const expected = createState();

    same(actual, expected, msg);
    end();
  });
});
```

Note: I use tape for unit tests because of its simplicity. I also have 2–3 years’ experience with Mocha and Jasmine, and miscellaneous experience with lots of other frameworks. You should be able to adapt these principles to whatever framework you choose.

Note the style I’ve developed to describe nested tests. Probably due to my background using Jasmine and Mocha, I like to start by describing the component I’m testing in an outer block, and then in inner blocks, describe what I’m passing to the component. Inside, I make simple equivalence assertions which you can do with your testing library’s deepEqual() or toEqual() functions.

As you can see, I use isolated test state and factory functions instead of utilities like beforeEach() and afterEach(), which I avoid because they can encourage inexperienced developers to employ shared state in the test suite (that’s bad).

As you’ve probably guessed, I have three different kinds of tests for each reducer:

- Direct reducer tests, which you’ve just seen an example of. These essentially test that the reducer produces the expected default state.

- Action creator tests, which test each action creator by applying the reducer to the action using some predetermined state as a starting point.

- Selector tests, which test the selectors to ensure that all expected properties are there, including computed properties with expected values.

You’ve already seen a reducer test. Let’s look at some other examples.

Action Creator Tests

```javascript
// action-creator-test.js
describe('addChat()', ({ test }) => {
  test('with no arguments', ({ same, end }) => {
    const msg = 'should add default chat message';

    const actual = pipe(
      () => reducer(undefined, addChat()),
      // make sure the id and timestamp are there,
      // but we don't care about the values
      state => {
        const chat = state.chatLog[0];
        chat.id = !!chat.id;
        chat.timeStamp = !!chat.timeStamp;
        return state;
      }
    )();

    const expected = Object.assign(createState(), {
      chatLog: [{
        id: true,
        user: 'Anonymous',
        msg: '',
        timeStamp: true
      }]
    });

    same(actual, expected, msg);
    end();
  });

  test('with all arguments', ({ same, end }) => {
    const msg = 'should add correct chat message';

    const actual = reducer(undefined, addChat({
      id: 1,
      user: '@JS_Cheerleader',
      msg: 'Yay!',
      timeStamp: 1472322852682
    }));

    const expected = Object.assign(createState(), {
      chatLog: [{
        id: 1,
        user: '@JS_Cheerleader',
        msg: 'Yay!',
        timeStamp: 1472322852682
      }]
    });

    same(actual, expected, msg);
    end();
  });
});
```

This example is interesting for a couple of reasons. The addChat() action creator is not pure. That means that unless you pass in value overrides, you can’t make a specific expectation for all the properties produced. To deal with this, we used a pipe, which I sometimes use to avoid creating extra variables that I don’t really need. I used it to ignore the generated values. We still make sure they exist, but we don’t care what the values are. Note that I’m not even checking the type. We’re trusting type inference and default values to take care of that.

A pipe is a functional utility that lets you shuttle some input value through a series of functions which each take the output of the previous function and transform it in some way. I use lodash pipe from lodash/fp/pipe, which is an alias for lodash/flow. Interestingly, pipe() itself can be created with a reducer function:

```javascript
// pipe.js
const pipe = (...fns) => x => fns.reduce((v, f) => f(v), x);

const fn1 = s => s.toLowerCase();
const fn2 = s => s.split('').reverse().join('');
const fn3 = s => s + '!';

const newFunc = pipe(fn1, fn2, fn3);
const result = newFunc('Time'); // emit!
```

I tend to use pipe() a lot in the reducer files as well to simplify state transitions. All state transitions are ultimately data flows moving from one data representation to the next. That’s what pipe() is good at.
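As a sketch of that idea, here is one state transition written as a pipe of small pure steps. The helpers `appendChat`, `setStatus`, and `trimLog` are hypothetical, made up for this example; each takes some data and returns a `state => newState` function, which is the shape pipe() composes.

```javascript
const pipe = (...fns) => x => fns.reduce((v, f) => f(v), x);

// Hypothetical transition helpers: each returns a state => newState function.
const appendChat = chat => state =>
  Object.assign({}, state, { chatLog: state.chatLog.concat(chat) });

const setStatus = statusMessage => state =>
  Object.assign({}, state, { statusMessage });

// Keep only the most recent `max` chats.
const trimLog = max => state =>
  Object.assign({}, state, { chatLog: state.chatLog.slice(-max) });

const state = {
  statusMessage: 'Online',
  chatLog: [
    { id: 1, user: 'Bender', msg: 'Hi' },
    { id: 2, user: 'Andrew', msg: 'Hey' }
  ]
};

// One state transition, expressed as a data flow through small pure steps.
const next = pipe(
  appendChat({ id: 3, user: 'Brian', msg: 'Bye' }),
  setStatus('Away'),
  trimLog(2)
)(state);
// next.chatLog holds chats 2 and 3; next.statusMessage is 'Away'; state is unchanged
```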

Note that the action creator lets us override all the default values, too, so we can pass specific ids and time stamps and test for specific values.

Selector Tests

Lastly, we test the state selectors and make sure that computed values are correct and that everything is as it should be:

```javascript
// state-selector-test.js
describe('getViewState', ({ test }) => {
  test('with chats', ({ same, end }) => {
    const msg = 'should return the state needed to render';

    const chats = [
      createChat({
        id: 2,
        user: 'Bender',
        msg: 'Does Barry Manilow know you raid his wardrobe?',
        timeStamp: 451671300000
      }),
      createChat({
        id: 2,
        user: 'Andrew',
        msg: `Hey let's watch the mouth, huh?`,
        timeStamp: 451671480000
      }),
      createChat({
        id: 1,
        user: 'Brian',
        msg: `We accept the fact that we had to sacrifice a whole Saturday in
detention for whatever it was that we did wrong.`,
        timeStamp: 451692000000
      })
    ];

    const state = chats.map(addChat).reduce(reducer, reducer());

    const actual = getViewState(state);
    const expected = Object.assign(createState(), {
      chatLog: chats,
      recentlyActiveUsers: ['Bender', 'Andrew', 'Brian']
    });

    same(actual, expected, msg);
    end();
  });
});
```

Notice that in this test, we’ve used Array.prototype.reduce() to reduce over a few example addChat() actions. One of the great things about Redux reducers is that they’re just regular reducer functions, which means you can do anything with them that you’d do with any other reducer function.

Our expected value checks that all our chat objects are in the log, and that the recently active users are listed correctly.

Not much else to say about that.
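If you want to try the three kinds of tests without installing a framework, they can be sketched with plain functions. This is not tape: `same` here is a hypothetical helper that compares JSON strings and throws on mismatch, and `addChat` is a simplified, pure variant (no cuid or Date.now()) so every value is predictable.

```javascript
// Hypothetical stand-in for a test library's deep-equality assertion.
// Comparing JSON strings is key-order sensitive, which is fine for these plain objects.
const same = (actual, expected, msg) => {
  const a = JSON.stringify(actual);
  const e = JSON.stringify(expected);
  if (a !== e) throw new Error(`${msg}: expected ${e}, got ${a}`);
};

const ADD_CHAT = 'CHAT::ADD_CHAT';

const reducer = (state = { chatLog: [] }, action = {}) => {
  switch (action.type) {
    case ADD_CHAT:
      return Object.assign({}, state, { chatLog: state.chatLog.concat(action.payload) });
    default: return state;
  }
};

// Simplified, pure action creator so every value is predictable.
const addChat = ({ id = 1, msg = '', user = 'Anonymous' } = {}) => ({
  type: ADD_CHAT,
  payload: { id, msg, user }
});

const getViewState = state => Object.assign({}, state, {
  recentlyActiveUsers: [...new Set(state.chatLog.map(chat => chat.user))]
});

// 1. Direct reducer test: the default state.
same(reducer(), { chatLog: [] }, 'reducer() should return the default state');

// 2. Action creator test: apply the reducer to the action from a known starting state.
same(
  reducer(undefined, addChat({ id: 7, msg: 'Yay!', user: 'Brian' })),
  { chatLog: [{ id: 7, msg: 'Yay!', user: 'Brian' }] },
  'addChat() should append the chat message'
);

// 3. Selector test: computed properties have the expected values.
const state = [addChat({ user: 'Bender' }), addChat({ user: 'Brian' }), addChat({ user: 'Bender' })]
  .reduce(reducer, reducer());

same(
  getViewState(state).recentlyActiveUsers,
  ['Bender', 'Brian'],
  'getViewState() should list each active user once'
);
```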

Redux Rules

If you use Redux correctly, you’re going to get major benefits:

- Eliminate timing dependency bugs

- Enable deterministic view renders

- Enable deterministic state reproduction

- Enable easy undo/redo features

- Simplify debugging

- Become a time traveler

But for any of that to work, you have to remember some rules:

- Reducers must be pure functions

- Reducers must be the single source of truth for their state

- Reducer state should always be serializable

- Reducer state should not contain functions
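A rough sketch of how you might check those last two rules in a test. `isSerializable` is a hypothetical helper that accepts only plain objects, arrays, strings, booleans, finite numbers, and null; it deliberately rejects functions, `undefined`, and class instances such as `Date`, `Map`, and `Set`, none of which survive a JSON round trip unchanged.

```javascript
const isPlainObject = value =>
  value !== null && typeof value === 'object' &&
  Object.getPrototypeOf(value) === Object.prototype;

// Recursively accept only JSON-safe values.
const isSerializable = value => {
  if (value === null) return true;
  switch (typeof value) {
    case 'string':
    case 'boolean':
      return true;
    case 'number':
      return Number.isFinite(value);
    case 'object':
      if (Array.isArray(value)) return value.every(isSerializable);
      return isPlainObject(value) && Object.values(value).every(isSerializable);
    default:
      // functions, undefined, symbols, bigints
      return false;
  }
};

isSerializable({ chatLog: [{ id: 1, msg: 'Hi' }], statusMessage: 'Online' }); // true
isSerializable({ onClick: () => {} });                                        // false
isSerializable({ lastSeen: new Date() });                                     // false
```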

Also keep in mind:

- Some apps don’t need Redux

- Use constants for action types

- Use action creators to decouple action logic from dispatch callers

- Use ES6 parameter defaults for self-describing signatures

- Use selectors for calculated state and decoupling

- Always use TDD!

Enjoy!

Are You Ready to Level Up Your Redux Skills with DevAnywhere?

Learn advanced functional programming, React, and Redux with 1:1 mentorship. Lifetime Access members, check out the functional programming and Redux lessons. Be sure to watch the Shotgun series & ride shotgun with me while I build real apps with React and Redux.
