Python ETL Tools: Best 8 Options

Want to do ETL with Python? Here are 8 great libraries and a hybrid option

ETL is the process of fetching data from one or many systems and loading it into a target data warehouse after doing some intermediate transformations. The market has various ETL tools that can carry out this process.

Some tools offer a complete end-to-end ETL implementation out of the box and some tools help you to create a custom ETL process from scratch and there are a few options that fall somewhere in between. In this post, we will see some commonly used Python ETL tools and understand in which situations they may be a good fit for your project.

Before going through the list of Python ETL tools, let’s first understand some essential features that any ETL tool should have.

Features of ETL Tools

ETL stands for Extract, Transform, and Load and so any ETL tool should be at least have the following features:

Extract

This is the process of extracting data from various sources. A good ETL tool supports various types of data sources. This should include most databases (both NoSQL and SQL-based) and file formats like csv, xls, xml, and json.

Transform

The extracted data is usually kept in a staging area where raw data is cleansed and transformed into a meaningful form for storing it in a data warehouse. A standard ETL tool supports all the basic data transformation features like row operations, joins, sorting, aggregations, etc.

Load

In the load process, the transformed data is loaded into the target warehouse database. The standard ETL tools support connectors for various databases like Snowflake, MS SQL, and Oracle.

Other Add-On Features

Apart from basic ETL functionality, some tools support additional features like dashboards for visualizing and tracking various ETL pipelines. In fact, besides ETL, some tools also provide the ability to carry out parallel or distributed processing, and in some cases even basic analytics, that can be good add-ons depending on your project requirement.

Python ETL Tools

Python is a programming language that is relatively easy to learn and use. Python has an impressively active open-source community on GitHub that is churning out new Python libraries and enhancement regularly. Because of this active community and Python’s low difficulty/functionality ratio, Python now sports an impressive presence in many diverse fields like game development, web development, application developments, NLP, and computer vision, just to name the few.

In recent years, Python has become a popular programming language choice for data processing, data analytics, and data science (especially with the powerful Pandas data science library). So it should not come as a surprise that there are plenty of Python ETL tools out there to choose from. Let’s take a look at the most common ones.

Petl

Petl (stands for Python ETL) is a basic tool that offers the standard ETL functionality of importing data from different sources (like csv, XML, json, text, xls) into your database. It is trivial in terms of features and does not offer data analytics capabilities like some other tools in the list. However, it does support all the standard transformations like row operation, sorting, joining, and aggregation.

Petl isn’t bad for a simple tool, but it can suffer from performance issues; especially compared to some of the other options out there. So if you just need to build a simple ETL pipeline and performance is not a big factor, then this lightweight tool should do the job. But for anything more complex or if you expect the project to grow in scope, you may want to keep looking.

Pandas

Logo for Pandas, a Python library useful for ETL

Pandas is one of the most popular Python libraries nowadays and is a personal favorite of mine. I’ve used it to process hydrology data, astrophysics data, and drone data. Its rise in popularity is largely due to its use in data science, which is a fast-growing field in itself, and is how I first encountered it.

Pandas use dataframes as the data structure to hold the data in memory (similar to how data is handled in the R programming language) Apart from regular ETL functionalities, Pandas supports loads of data analytics and visualization features.

Pandas is relatively easy to use and has many rich features, which is why it is a commonly used tool for simple ETL and exploratory data analysis by data scientists. If you are already using Pandas it may be a good solution for deploying a proof-of-concept ETL pipeline.

Mara

Mara is a Python ETL tool that is lightweight but still offers the standard features for creating an ETL pipeline. It also offers other built-in features like web-based UI and command line integration. Web UI helps to visualize the ETL pipeline execution, which can also be integrated into a Flask based app. It uses PostgreSQL as the data processing engine.

If you are looking for an ETL tool that is simple but still has a touch of sophisticated features then Mara can be a good choice.

Airflow

Logo for Apache Airflow

Apache Airflow was created by Airbnb and is an open source workflow management tool. It can be used to create data ETL pipelines. Strictly speaking, it is not an ETL tool itself, instead, it is more of an orchestration tool that can be used to create, schedule, and monitor workflows. This means you can use Airflow to create a pipeline by consolidating various independently written modules of your ETL process.

Airflow workflow follows the concept of DAG (Directed Acyclic Graph). Airflow, like other tools in the list, also has a browser-based dashboard to visualize workflow and track execution of multiple workflows. Airflow is a good choice if you want to create a complex ETL workflow by chaining independent and existing modules together

Pyspark

PySpark Logo

Pyspark is the version of Spark which runs on Python and hence the name. As per their website, “Spark is a unified analytics engine for large-scale data processing.”

The Spark core not only provides robust features for creating ETL pipelines but also has support for data streaming (Spark Streaming), SQL (Spark SQL), machine learning (MLib) and graph processing (Graph X).

The main advantage of using Pyspark is the fast processing of huge amounts data. So if you are looking to create an ETL pipeline to process big data very fast or process streams of data, then you should definitely consider Pyspark. That said, it’s not an ETL solution out-of-the-box, but rather would be one part of your ETL pipeline deployment.

Bonobo

Logo for Bonobo Python ETL tool

Bonobo is a lightweight ETL tool built using Python. It is simple and relatively easy to learn. It uses the graph concept to create pipelines and also supports the parallel processing of multiple elements in the pipeline. It also has a visual interface where the user can track the progress of the ETL pipeline.

All in all, it is just another easy-to-use ETL Python tool, that may be a good option for simple use-cases, but doesn’t have a lot of stand out features that separate it from the pack.

(And yes, we have both Pandas and Bonobos in this list)

Luigi

Logo for the Luigi Python ETL tool

Luigi is a Python-based ETL tool that was created by Spotify but now is available as an open-source tool. It is a more sophisticated tool than many on this list and has powerful features for creating complex ETL pipelines. As per their Github page, “It handles dependency resolution, workflow management, visualization, handling failures, command line integration, and much more”.

It also comes with a web dashboard to track all the ETL jobs. If you are looking to build an enterprise solution then Luigi may be a good choice.

Odo

Odo is a Python tool that can convert data from one format to another. But its main noteworthy feature is the performance it gives when loading huge csv datasets into various databases.

As they describe it on their website: “Odo uses the native CSV loading capabilities of the databases it supports. These loaders are extremely fast. Odo will beat any other pure Python approach when loading large datasets.”

I haven’t done a performance test to verify these claims, but if anyone has, please share in the comments. But regardless, it’s use-case seems clear: if you are looking to create a simple pipeline where the focus is just to load huge csv datasets into your data warehouse, then you should give Odo a try.

Hybrid Option

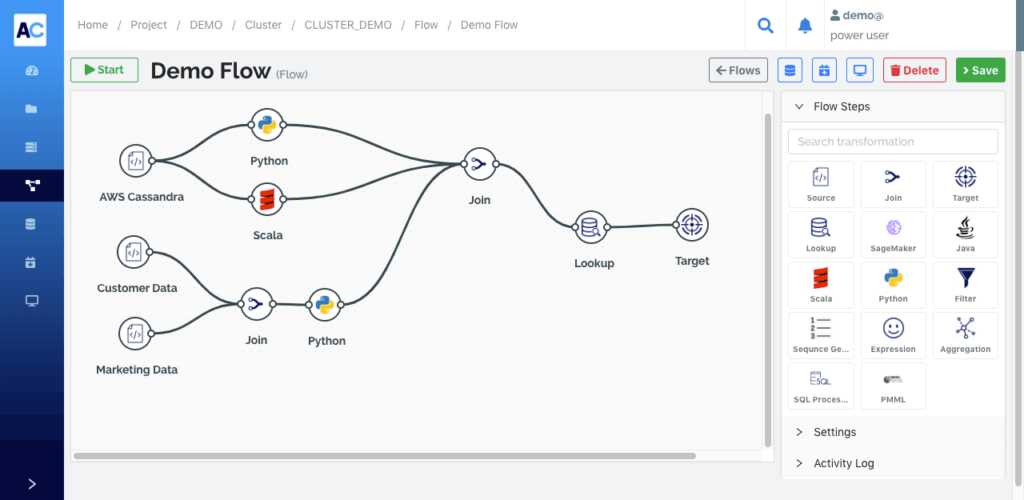

As an alternative to going pure Python in your ETL deployment, you may want to take a look at Avik Cloud. Avik Cloud is an Apache Spark-based ETL platform where you can visually build out your ETL pipeline in their Flow Builder. Here’s the thing, Avik Cloud lets you enter Python code directly into your ETL pipeline.

The Avik Cloud flowbuilder ETL tool where you can directly add Python code

The one drawback is that, unlike the Python libraries, Avik Cloud is a SAAS product and charges licensing fees. The good part is that their pricing structure is based on the pricing practices of cloud providers like AWS, Google Cloud, and Azure, and only charges for usage.

Conclusion

As you saw, there are plenty of Python ETL tools to choose from and each brings its own set of features and drawbacks. Whether you are looking for just standard ETL functionality or if you are looking for more add-on features and sophistication, Python may be a good choice. If you’re looking to build out an enterprise solution with more moving parts, it may be worth looking at a hybrid solution like Avik Cloud, that allows you to do Python transformations in your ETL pipeline but still easily connect to other datasources and products.

Suggest:

☞ Python Tutorials for Beginners - Learn Python Online

☞ Learn Python in 12 Hours | Python Tutorial For Beginners

☞ Complete Python Tutorial for Beginners (2019)

☞ Python Programming Tutorial | Full Python Course for Beginners 2019